Just got back from the reception at the Organization of American States - many great conversations, and overall a very productive day for me.

Organization of American States

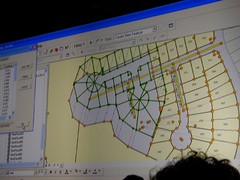

I sat in on a few of the Homeland Security and Emergency Response sessions - we have an ever-expanding role in that arena. Prominent was IRRIS, from our business partner and fellow Pennsylvania firm, GeoDecisions. We are currently looking at some possibilities for bringing data from mobile sensor platforms directly into IRRIS, as well as dynamic search and discovery for sensor data - it will be interesting to see how it all unfolds.

Other Homeland Security / Emergency Response sessions touched on EMMA, as a good model for statewide emergency management with wide stakeholder support.

There was a good session on NIMS, as it moves forward. The pieces are rapidly falling into place for broader integration and use of incident data, with the vision of local-state-federal coordination finally becoming more of a reality. Within NIMS, they mentioned the NOAA plume modeling facility, which is continually run for known sites, utilizing current weather conditions. I wonder how well this is, or can be, tied into available facility data for all of the applicable EPA FRS sites. The NIMS document is currently being revisited for updating, presenting many new opportunities to harmonize incident management efforts across agencies.

It makes a great deal of sense to bring ever more stakeholders together, not just for simple data sharing, but also for lessons learned, sharing of SOPs, models, types of analyses performed, and so on. One report cited as an excellent resource is the 2007 National Academy of Sciences report, Successful Response Starts with a Map: Improving Geospatial Support for Disaster Management, which cites a number of areas for improvement - for example, that data standards do not yet meet emergency response needs, and that training and exercises for responders need geospatial intelligence built into them.

While we didn't have a booth at this event, I saw at least one fellow SDVOSB exhibitor, Penobscot Bay Media - they are doing work in LIDAR scanning mounted on a robotic platform. We talked VETS GWAC a bit... My friends at the EPA booth saw some very brisk business, although they didn't manage to draw Jack Dangermond in. We did catch up with Jack later (will post the picture another time, as it was not my digital camera...)

I got a few minutes to chat with Adena Schutzberg, of Directions Magazine, All Points Blog, and many other good things - she was my Teaching Assistant way back when, at Penn State for Spatial Analysis with Dr. Peter Gould. A few encouraging words from her on my blog and the many diverse things I manage to get my fingers into...

As for the final sessions I got to sit in on - I had a few meetings here and there, which punctuated the day, and I unfortunately ended up missing the GeoLoB discussion, but caught the tail end of the Geospatial One Stop presentation by Rob Dollison of USGS. He discussed a number of things coming down the pike in the next few months, as build 2.1 gets pushed to production in the next month or so, 2.2 in the March-June timeframe, followed by 2.3 - many interesting enhancements, such as search relevance, search booleans, viewing results as a bounding box, a fast base map, 3D viewing, and so on. Exciting stuff. On our end, we are looking for ways to tie our GPT instance at EPA, the GeoData Gateway, into GOS, through the EPA firewall, and using integrated security. We are lucky there to have Marten Hogeweg and the same ESRI team working with us that developed GOS.

One thing that had me wondering about the implementation was "authoritative data sources". Here, the intent would be to present "authoritative data sources" near the top of the search results. Will this be another field in the metadata record? Is it determined by virtue of its publisher?

At any rate, it was an enjoyable day - I saw many friends, had a lot of exciting and productive discussions, and am looking forward to more of the same tomorrow. But now, I'm ready for bed...

Here's another exciting bit of news - my firm is teamed with CGI Federal on USEPA's Software Engineering & Specialized Scientific Support (SES3) Contract, and we just got word that our team has won EPA's

As such - we also anticipate that we will be looking to grow as a company, and to hire additional technical gurus with capabilities in data exchange, data management and data flows, particularly if you have prior experience with and knowledge of EPA's Exchange Network and CDX, and/or geospatial technology.

"Liberate The Data"

Just got home from FedUC and see snow on the ground here-