As economic pressures and tightening budgets, a growing population, rising infrastructure demands, and limited resources continue to confront states, municipalities, and the nation as a whole, some harsh realities begin to emerge about how much we can actually, pragmatically accomplish.

As just one example, studies comparing the number of vehicle lane miles traveled with the number of vehicle lane miles constructed and maintained show a clear divergence, and send the message that demand has by far been outstripping supply.

One solution to this would be to just keep building roads everywhere. However, this is a simplistic and ultimately unsustainable solution. Certainly, we DO need to stabilize current infrastructure and address some critical physical capacity bottlenecks, and in some instances we do need to improve circulation and flow in existing transportation networks. But we also need to change our thinking in terms of how we assess, monitor and manage traffic and congestion.

Here, approaches such as use of Intelligent Transportation Systems can provide better visibility into traffic issues and offer solutions toward better management of the transportation network.

Via any number of technologies, such as embedded sensors, cameras, on-board systems and GPS, message boards and other forms of providing traffic advisory data, and hazards monitoring, traffic crises can be averted, congestion can be managed, and traffic rerouted to make optimal use of existing transportation networks. The use cases for embedded technology are numerous – while repairing or replacing our crumbling bridges, we can consider technologies to monitor bridge decks for icing conditions, and so on. We can utilize available traffic data along with spatial, temporal and predictive analysis, e.g. virtual origin-destination studies and other approaches to recognize patterns and trends, toward avoiding traffic jams or even conditions which may be prone to promoting accidents.


As another example, decades of poor, unsustainable planning, zoning, and land development practices have promoted suburban sprawl, pedestrian-unfriendly areas, and dependence on cars for even the most mundane of errands, particularly as residential and commercial areas have become separated from each other in artificial models. In some areas this has been recognized, and we see a return in some locales to “town center” concepts, where residents can find amenities within walking distance. Here again, planners need proper tools and geospatial data to correct these planning paradigms, both on a macro scale, to recognize these bedroom community relationships, and on a micro scale, for example to maximize pedestrian travel and optimize these local networks.

Additionally, we need to continue to promote mass transit options, aligned to serve core needs – commuters, shopping, and similar needs, based on observation of current traffic flows. If mass transit becomes enough of a convenience factor, it will continue to be utilized. Similarly, other mass-transit-related infrastructure needs to be examined. Here, spatial and temporal analysis of the network can reap great benefit toward maximizing mass transit networks and flows, their alignment to need (supply and demand for transit) and their efficiency.

These types of solutions are needed all around us. For example, as a regular visitor to the Washington, DC area, I often use the otherwise-excellent WMATA Metro system. However, many demand issues and patterns rapidly become evident to even the casual eye. In outlying areas served by the Metro, most of the parking lots and garages fill immediately in the AM and become deserted after work hours, a sign that commuters from outlying areas are driving in to park and ride. For anyone arriving at, say, 10AM, there’s a good likelihood that some of these parking facilities will have long been filled, forcing potential users to travel further before being able to avail themselves of mass transit. Here again, in something as simple as expanding parking capacity, there is an opportunity being lost to get traffic off of the streets.


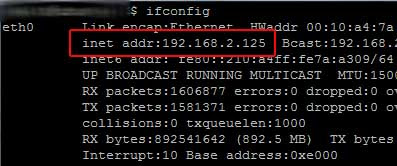

Even these types of things relating to commuting via Metro can also tie into Intelligent Transportation Systems, by providing parking advisories (e.g. saving commuters the grief of trying to find a space in a particular lot when parking may already be full) or by advising pedestrians right at street-level when the next train is arriving or of capacity issues (particularly when it may actually be worthwhile to just walk a few blocks to a different station); or by allowing better means of assessing travel options via web and/or location-aware mobile devices. Here, geospatial approaches can even allow users to get custom travel directions and planning via walking, mass transit, or for handicapped persons, routing via ADA curb cuts, avoidance of stairs, steep inclines, and other useful information toward ensuring safe and reasonable travel, even delivered directly to their phone or other mobile devices.
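To make the accessible-routing idea a bit more concrete, here is a minimal sketch in Python, assuming a tiny, entirely hypothetical pedestrian network in which each walkway segment is tagged with any barriers it contains (stairs, steep inclines, missing curb cuts). The node names, distances and the `shortest_route` helper are invented for illustration; a real service would draw on surveyed sidewalk and curb-cut data. It simply runs Dijkstra's algorithm while skipping any segment that carries a barrier the traveler needs to avoid.

```python
import heapq

# Hypothetical pedestrian network (illustrative only).
# Each edge is (neighbor, length in metres, set of barrier tags).
GRAPH = {
    "home":    [("corner", 100, set()), ("plaza", 60, {"stairs"})],
    "corner":  [("home", 100, set()), ("station", 150, set())],
    "plaza":   [("home", 60, {"stairs"}), ("station", 50, set())],
    "station": [("corner", 150, set()), ("plaza", 50, set())],
}

def shortest_route(graph, start, goal, avoid=frozenset()):
    """Dijkstra's algorithm, skipping any edge tagged with a barrier in `avoid`."""
    queue = [(0, start, [start])]   # (distance so far, node, path taken)
    settled = set()
    while queue:
        dist, node, path = heapq.heappop(queue)
        if node == goal:
            return dist, path
        if node in settled:
            continue
        settled.add(node)
        for neighbor, length, barriers in graph[node]:
            # Only follow segments that contain none of the barriers to avoid.
            if neighbor not in settled and not (barriers & avoid):
                heapq.heappush(queue, (dist + length, neighbor, path + [neighbor]))
    return None  # no route satisfies the constraints

# Unconstrained route takes the shortcut through the plaza stairs.
print(shortest_route(GRAPH, "home", "station"))
# A traveler who must avoid stairs is sent the longer way around.
print(shortest_route(GRAPH, "home", "station", avoid={"stairs"}))
```

In this toy network the direct route is 110 m via the plaza stairs, while the stair-free route is 250 m via the corner. The same filtering approach could be extended with tags for inclines or missing curb cuts, or with per-segment penalties rather than outright exclusion.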

With HR1 and discussion of massive infrastructure investments on the horizon, we strongly need to consider an integrated strategy and investment for integrated data and analysis, to include remote sensing, geospatial, temporal and others - to go hand-in-hand with hard, bricks-and-mortar infrastructure investments – such that we may better manage the assets. And yes, this could be done independently in dozens of disparate efforts, but would be best leveraged through discourse and technical coordination and information sharing on a broader scale to leverage planning capabilities, modeling, and much more; again, it points up the need for a national vision and strategy for spatial data infrastructure.

If we are going to do this at all, we need to get it right.

While efforts remain local, we need a paradigm shift on many levels: to think beyond our traditional project-by-project approach and think on a bigger level, to integrate IT into our planning process as well as directly into our bricks-and-mortar infrastructure investments, and to better coordinate and leverage investments and efforts to provide this long-term benefit.

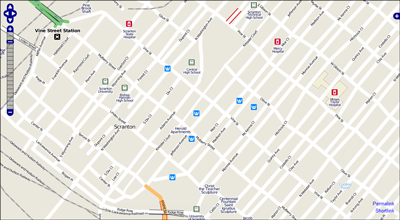


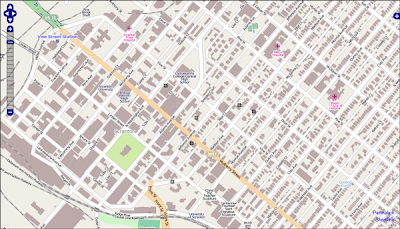

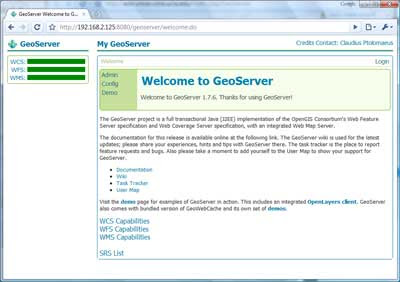

And have quickly been going to something that looks like this:


Here, I've been using orthophoto WMS services and other datasets to correct streets and railroads, digitize streams, and add building footprints, parks, trails and amenities, and in just a short time I am rapidly arriving at a useful and reasonably attractive map (note that this is still in progress). Further, the data can also be reused in a variety of ways, such as in Open Source routing services, with custom styling and symbology, and so on.
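For readers who haven't worked with WMS before, here is a minimal sketch of how a request for an orthophoto image might be assembled in Python. The parameter names follow the standard WMS 1.1.1 GetMap request; the endpoint URL, the layer name and the `wms_getmap_url` helper are placeholders I've made up for illustration, not the actual services used for the map above.

```python
from urllib.parse import urlencode

def wms_getmap_url(base_url, layer, bbox, width=512, height=512):
    """Build a WMS 1.1.1 GetMap URL for one image covering `bbox`.

    bbox is (min_lon, min_lat, max_lon, max_lat) in EPSG:4326.
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.1.1",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",                 # empty value requests the default style
        "SRS": "EPSG:4326",           # plain longitude/latitude coordinates
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": "image/jpeg",
    }
    return base_url + "?" + urlencode(params)

# Hypothetical endpoint and layer name, for illustration only.
url = wms_getmap_url(
    "https://example.org/wms",
    "orthophoto",
    (-77.1, 38.8, -77.0, 38.9),
)
print(url)
```

Most editing tools build requests like this behind the scenes as you pan and zoom; seeing the pieces spelled out can help when a service needs to be configured by hand.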


Here's another exciting bit of news - my firm is teamed with CGI Federal on USEPA's Software Engineering & Specialized Scientific Support (SES3) Contract, and we just got word that our team has won EPA's

As such, we also anticipate that we will be looking to grow as a company, and will be looking to hire additional technical gurus with capabilities in data exchange, data management and data flows, particularly those with prior experience and knowledge of EPA's Exchange Network and CDX, and/or geospatial technology.


The last several weeks have been quite hectic - busy on a number of fronts, which is a thankful thing, given the economy has slowed down a bit - but here is something quick that I wanted to share - I was awarded "

Above: Supplied "neweng.av" Tutorial data with choropleth mapping of New England...


Above: "maplewd.av" Tutorial data...


Supported data types: Arc/Info coverages, workspaces images (note .bil image supplied as part of the AV1.0 tutorial data), address coverage... Note also that shapefiles are NOT supported.